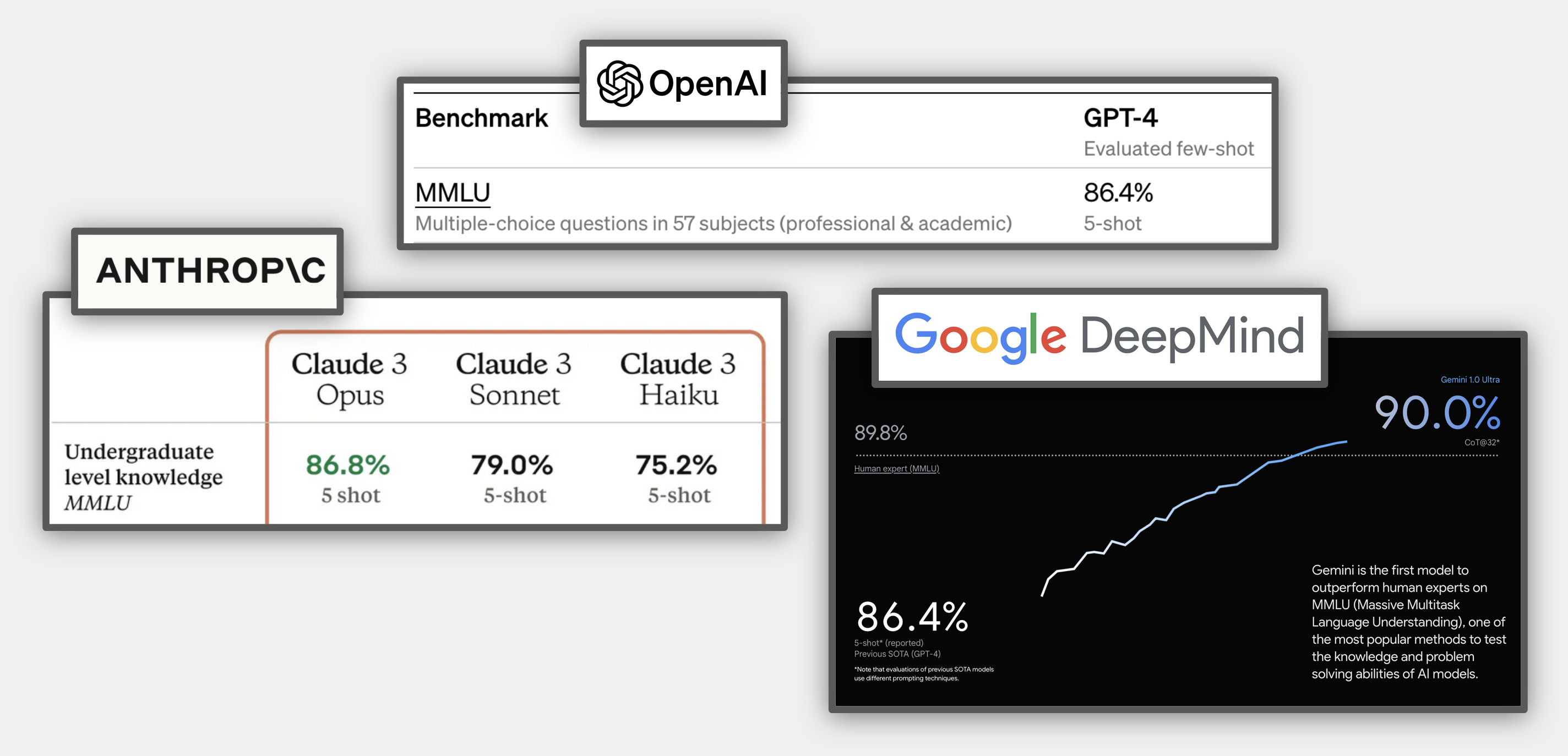

The Massive Multitask Language Understanding (MMLU) benchmark (Hendrycks et al., 2021) is widely used to demonstrate state-of-the-art language model capabilities. Anthropic’s Claude 3, Google’s Gemini and OpenAI’s GPT-4 models were all introduced alongside prominently placed MMLU results, making MMLU one of the most widely discussed benchmarks for language models. Despite this prominence, which model capabilities the benchmark evaluates, and how it evaluates them, is less widely known. In this blog post, I aim to give a short overview of the MMLU benchmark.

Screenshots of large model providers prominently sharing MMLU results in recent model releases. Taken from official websites discussing model releases of Anthropic’s Claude 3, Google’s Gemini and OpenAI’s GPT-4.

Evaluated model capabilities

As the name suggests, the “massive” MMLU benchmark was created to evaluate language models’ capabilities on a wider range of tasks than previously available benchmarks such as SuperGLUE (Wang et al., 2019). To this end, MMLU focuses on diverse knowledge tasks, ranging from STEM topics (such as abstract algebra) to humanities subjects (such as world religions). Below is the complete set of 57 categories covered by MMLU, as listed in the paper:

Abstract Algebra, Anatomy, Astronomy, Business Ethics, Clinical Knowledge, College Biology, College Chemistry, College Comp Sci, College Mathematics, College Medicine, College Physics, Computer Security, Conceptual Physics, Econometrics, Electrical Engineering, Elementary Mathematics, Formal Logic, Global Facts, High School Biology, High School Chemistry, High School Comp Sci, High School European History, High School Geography, High School Gov’t and Politics, High School Macroeconomics, High School Mathematics, High School Microeconomics, High School Physics, High School Psychology, High School Statistics, High School US History, High School World History, Human Aging, Human Sexuality, International Law, Jurisprudence, Logical Fallacies, Machine Learning, Management, Marketing, Medical Genetics, Miscellaneous, Moral Disputes, Moral Scenarios, Nutrition, Philosophy, Prehistory, Professional Accounting, Professional Law, Professional Medicine, Professional Psychology, Public Relations, Security Studies, Sociology, US Foreign Policy, Virology, and World Religions.

Evaluation methods

The MMLU benchmark consists entirely of multiple-choice questions, each with four possible answers (A, B, C, or D). A model that guesses uniformly at random would therefore still score 25% accuracy in expectation. The authors report that non-expert Amazon Mechanical Turk workers achieve 34.5% accuracy on the test, and they estimate expert human performance on the entire benchmark at approximately 89.8% accuracy.
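The 25% figure is simply 1/4: with four options and uniform guessing, each question is answered correctly with probability one in four. A quick simulation makes this concrete. This is a minimal sketch: the gold labels are randomly generated placeholders (not real MMLU data), and the `accuracy` helper is a hypothetical name chosen for illustration.

```python
import random

CHOICES = ["A", "B", "C", "D"]


def accuracy(predictions: list[str], answers: list[str]) -> float:
    """Fraction of questions where the predicted letter matches the gold letter."""
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    correct = sum(p == a for p, a in zip(predictions, answers))
    return correct / len(answers)


# Hypothetical gold answers: 14,079 random letters standing in for the real
# test-set labels (placeholders, not actual MMLU data).
rng = random.Random(0)
answers = [rng.choice(CHOICES) for _ in range(14_079)]

# A "model" that ignores the question and picks one of the four letters uniformly.
random_predictions = [rng.choice(CHOICES) for _ in answers]

print(f"Expected random accuracy: {1 / len(CHOICES):.1%}")
print(f"Simulated random accuracy: {accuracy(random_predictions, answers):.1%}")
```

With over 14,000 questions the simulated accuracy lands very close to 25%, which is why scores near that level indicate essentially no knowledge of the tested material.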

MMLU’s main test set contains 14,079 multiple-choice questions, with at least 100 questions for each category above. All questions were manually collected by undergraduate and graduate students from materials publicly available online, such as exams and exam preparation guides.

As is common practice, a GPT-style language model’s “choice” is determined by placing a prompt containing a benchmark question in the model’s context. The model then computes probabilities for the next token, and the probabilities of the tokens corresponding to the possible answers (A, B, C and D) are compared. The answer token with the highest probability under the prompted model is taken as the model’s choice.
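The procedure above can be sketched as follows. This is a minimal sketch under stated assumptions: the prompt format (a header naming the subject, the question, four lettered options and a trailing "Answer:") is modelled on the style of the paper's evaluation code but should not be taken as its exact template, and `next_token_logprobs` is a hypothetical stand-in for a real model that returns invented numbers. Only the prompt construction and the argmax over the four answer tokens are meant literally.

```python
import math

CHOICES = ["A", "B", "C", "D"]


def format_prompt(subject: str, question: str, options: list[str]) -> str:
    """Build a zero-shot multiple-choice prompt (assumed format, see lead-in)."""
    lines = [f"The following are multiple choice questions (with answers) about {subject}.", "", question]
    lines += [f"{letter}. {option}" for letter, option in zip(CHOICES, options)]
    lines.append("Answer:")
    return "\n".join(lines)


def next_token_logprobs(prompt: str) -> dict[str, float]:
    """Hypothetical stand-in for a model's next-token log-probabilities.

    A real implementation would run a language model on `prompt`; these values are invented.
    """
    return {" A": math.log(0.05), " B": math.log(0.10), " C": math.log(0.15),
            " D": math.log(0.60), " The": math.log(0.10)}


def choose_answer(logprobs: dict[str, float]) -> str:
    """Return the answer letter whose token has the highest log-probability.

    Only the four answer tokens are compared; any other token (e.g. " The") is ignored.
    Letters are looked up with a leading space, as many tokenizers encode "Answer: D" that way.
    A letter missing from `logprobs` is treated as having probability zero.
    """
    scores = {letter: logprobs.get(" " + letter, -math.inf) for letter in CHOICES}
    return max(scores, key=scores.get)


prompt = format_prompt(
    "microeconomics",
    "One of the reasons that the government discourages and regulates monopolies is that",
    [
        "producer surplus is lost and consumer surplus is gained.",
        "monopoly prices ensure productive efficiency but cost society allocative efficiency.",
        "monopoly firms do not engage in significant research and development.",
        "consumer surplus is lost with higher prices and lower levels of output.",
    ],
)
print(prompt)
print("Model choice:", choose_answer(next_token_logprobs(prompt)))
```

Restricting the argmax to the four answer tokens means the model is always scored as picking one of A to D, even when its single most likely next token is something else entirely. Whether the answer tokens carry a leading space depends on the tokenizer, so this detail is worth checking for any specific model.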

Example tasks

The following example tasks are taken from the MMLU paper (Hendrycks et al., 2021).

Microeconomics example question

One of the reasons that the government discourages and regulates monopolies is that

- (A) producer surplus is lost and consumer surplus is gained.

- (B) monopoly prices ensure productive efficiency but cost society allocative efficiency.

- (C) monopoly firms do not engage in significant research and development.

- (D) consumer surplus is lost with higher prices and lower levels of output.

Question taken from Figure 3 of the paper, with some formatting added. The correct answer is (D).

College mathematics example question

In the complex z-plane, the set of points satisfying the equation $z^2 = |z|^2$ is a

- (A) pair of points

- (B) circle

- (C) half-line

- (D) line

Question taken from Figure 4 of the paper, with some formatting added. The correct answer is (D).
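To see why (D) is correct, write $z = x + iy$ with real $x$ and $y$ and compare real and imaginary parts of both sides:

```latex
\begin{aligned}
z^2 &= (x + iy)^2 = x^2 - y^2 + 2ixy, \\
|z|^2 &= x^2 + y^2, \\
z^2 = |z|^2 &\iff x^2 - y^2 = x^2 + y^2 \ \text{and}\ 2xy = 0 \\
&\iff y = 0.
\end{aligned}
```

The solution set is therefore the whole real axis $\{x + 0i : x \in \mathbb{R}\}$, which is a line rather than a pair of points, a circle or a half-line.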

Professional medicine example question

A 33-year-old man undergoes a radical thyroidectomy for thyroid cancer. During the operation, moderate hemorrhaging requires ligation of several vessels in the left side of the neck. Postoperatively, serum studies show a calcium concentration of 7.5 mg/dL, albumin concentration of 4 g/dL, and parathyroid hormone concentration of 200 pg/mL. Damage to which of the following vessels caused the findings in this patient?

- (A) Branch of the costocervical trunk

- (B) Branch of the external carotid artery

- (C) Branch of the thyrocervical trunk

- (D) Tributary of the internal jugular vein

Question taken from Figure 5 of the paper, with some formatting added. The correct answer is (C).

Limitations

As with any ML benchmark, results reported on MMLU should be treated with caution. In particular, models may have been extensively overfitted to the benchmark. First, MMLU is easily available online and, unless appropriately decontaminated, would automatically form part of pre-training data scraped from the internet. Second, even if the benchmark data is not directly leaked into training data, MMLU is clearly a metric that is tracked (and likely optimised for) by all state-of-the-art model developers: as shown above, all major developers have released materials reporting their models’ performance on MMLU. See my blog post on benchmark problems for further discussion of overfitting and related benchmark problems that may apply to MMLU.
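One common heuristic for the kind of decontamination mentioned above is to flag a benchmark question if it shares a long word n-gram with the training corpus. The sketch below is a simplified, hypothetical version of that idea: the n-gram length of 13, the lower-casing and the punctuation stripping are all assumptions, the corpus is a toy example, and this is not how any particular model developer decontaminates MMLU.

```python
import re


def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased word n-grams of `text`, ignoring punctuation.

    Texts shorter than n words yield an empty set and so can never be flagged.
    """
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def build_index(corpus: list[str], n: int = 13) -> set[tuple[str, ...]]:
    """Collect every word n-gram occurring anywhere in the (hypothetical) training corpus."""
    index: set[tuple[str, ...]] = set()
    for document in corpus:
        index |= word_ngrams(document, n)
    return index


def is_contaminated(question: str, index: set[tuple[str, ...]], n: int = 13) -> bool:
    """Flag a question if any of its word n-grams also appears in the corpus index."""
    return not word_ngrams(question, n).isdisjoint(index)


question = ("One of the reasons that the government discourages and regulates monopolies is that "
            "consumer surplus is lost with higher prices and lower levels of output.")
corpus = [
    "Forum post: one of the reasons that the government discourages and regulates monopolies "
    "is that consumer surplus is lost with higher prices and lower levels of output!",
    "An unrelated web page about astronomy and the orbits of the outer planets.",
]
index = build_index(corpus)
print(is_contaminated(question, index))
```

Exact-overlap checks like this miss paraphrased or translated copies of questions, and they do nothing against the second concern above (developers optimising for the metric), so a clean result should not be read as proof that a score is uncontaminated.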

Further resources

The code for reproducing the paper’s results was published on GitHub, including instructions for downloading the MMLU dataset. Interesting follow-up work includes the Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark (Yue et al., 2023), abbreviated as MMMU.
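As a starting point for working with the downloaded data, the sketch below parses questions from a CSV file. It assumes a layout with no header row and six columns per row (the question, the four options, and the answer letter); treat this layout, the hypothetical `MMLUQuestion` class and the inline sample row as assumptions for illustration, and check the files you actually download before relying on it.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable


@dataclass
class MMLUQuestion:
    """One multiple-choice item (hypothetical container class)."""
    question: str
    options: list[str]  # option texts for A, B, C and D, in order
    answer: str         # gold letter, one of "A", "B", "C", "D"


def parse_mmlu_csv(handle: Iterable[str]) -> list[MMLUQuestion]:
    """Parse rows of the assumed form: question, option A, option B, option C, option D, answer."""
    items = []
    for row in csv.reader(handle):
        if len(row) != 6:
            raise ValueError(f"expected 6 columns, got {len(row)}: {row!r}")
        question, *options, answer = row
        if answer not in ("A", "B", "C", "D"):
            raise ValueError(f"unexpected answer label {answer!r}")
        items.append(MMLUQuestion(question, options, answer))
    return items


# Inline sample in the assumed format, using the college mathematics example above.
sample = io.StringIO(
    '"In the complex z-plane, the set of points satisfying the equation z^2 = |z|^2 is a",'
    "pair of points,circle,half-line,line,D\n"
)
items = parse_mmlu_csv(sample)
print(items[0].options[3], items[0].answer)
```

For a real file, pass an open handle instead, e.g. `with open(path, newline="", encoding="utf-8") as f: items = parse_mmlu_csv(f)`, where `path` is wherever you stored the data. Failing loudly on malformed rows is a deliberate choice: silently skipping them would quietly change the number of questions an accuracy is computed over.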

References

- Hendrycks, D., Burns, C., Basart, S., Zou, A., Mazeika, M., Song, D. & Steinhardt, J. (2021). Measuring Massive Multitask Language Understanding. https://doi.org/10.48550/arXiv.2009.03300

- Wang, A., Pruksachatkun, Y., Nangia, N., Singh, A., Michael, J., Hill, F., Levy, O. & Bowman, S. (2019). SuperGLUE: A Stickier Benchmark for General-Purpose Language Understanding Systems. Curran Associates, Inc.. Retrieved from https://proceedings.neurips.cc/paper/2019/hash/4496bf24afe7fab6f046bf4923da8de6-Abstract.html

- Yue, X., Ni, Y., Zhang, K., Zheng, T., Liu, R., Zhang, G., Stevens, S., Jiang, D., Ren, W., Sun, Y., Wei, C., Yu, B., Yuan, R., Sun, R., Yin, M., Zheng, B., Yang, Z., Liu, Y., Huang, W., Sun, H., Su, Y. & Chen, W. (2023). MMMU: A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI. https://doi.org/10.48550/arXiv.2311.16502

Citation

If you found this post useful for your work, please consider citing it as:

Findeis, Arduin. (Mar 2024). MMLU benchmark overview. Retrieved from https://arduin.io/blog/mmlu-overview/.

or

@article{Findeis2023MMLUbenchmarkoverview,
  title = "MMLU benchmark overview",
  author = "Findeis, Arduin",
  journal = "arduin.io",
  year = "2024",
  month = "March",
  url = "https://arduin.io/blog/mmlu-overview/"
}